Experiences


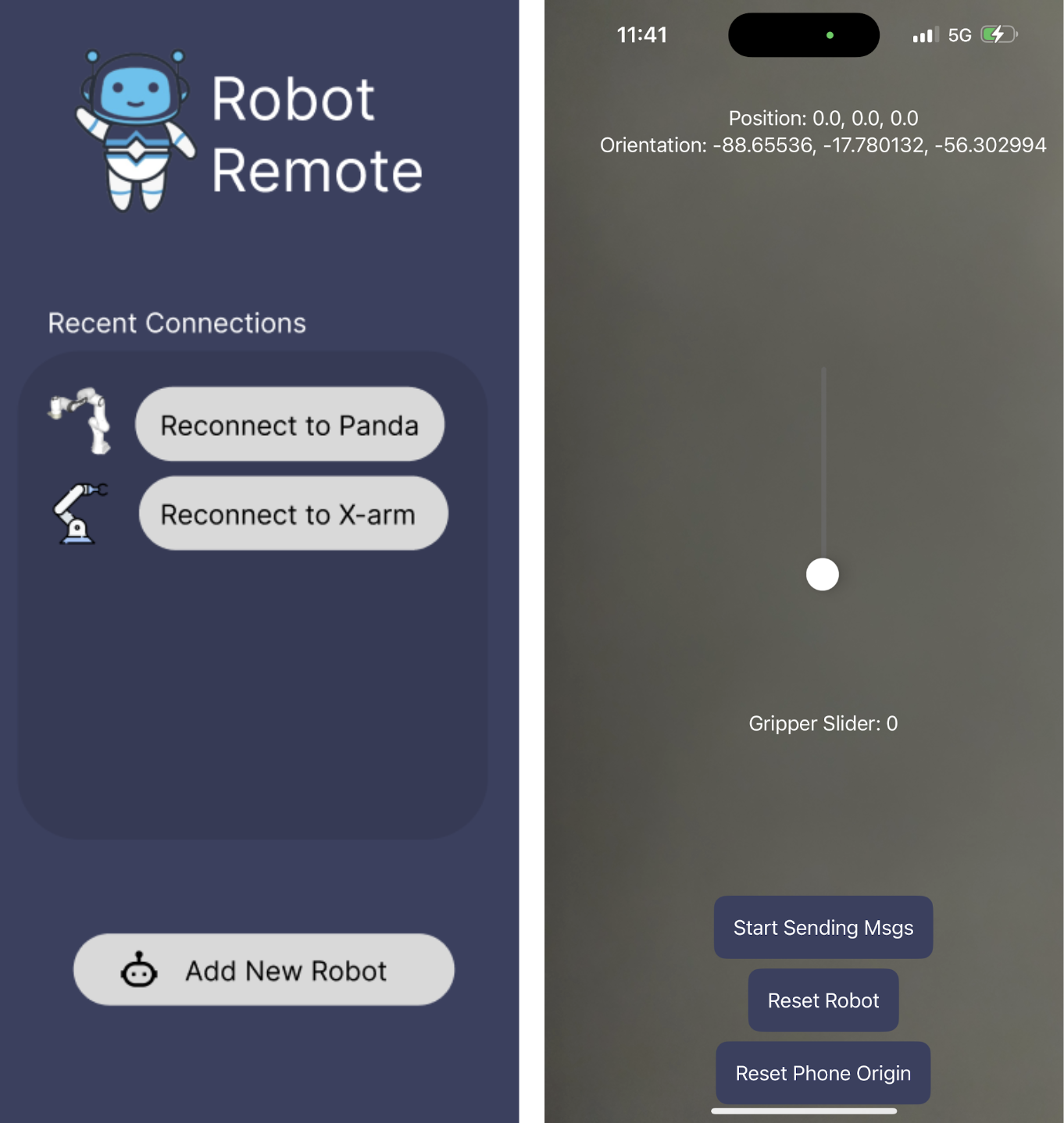

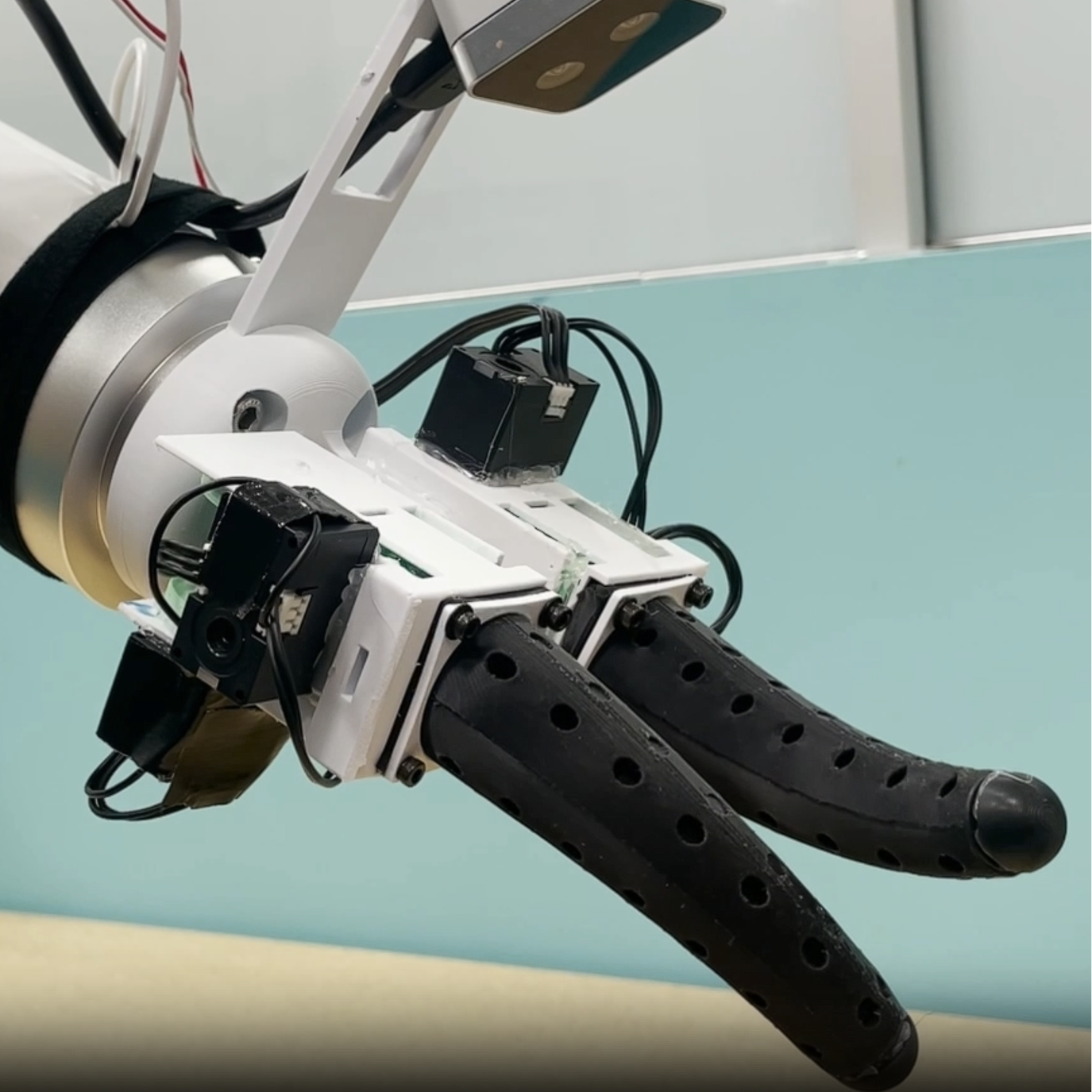

Robot Remote

Remote-control system letting users teleoperate a robot from anywhere with just their phone.

The Robot Remote Project

Robotic Teleoperation from Your Phone

The most exciting and extensive project I worked on was the Robot Remote. It has the potential to reach a broad audience: it could be used in healthcare for patients with limited reach, in environments that require sterile conditions, or in areas that are difficult to access.

The Robot Remote is a research-oriented robotic platform that lets users control a physical robot from anywhere in the world. It began as a MuJoCo simulation of a robotic arm that received data from a phone app through a server and interacted with simple virtual environments. It eventually grew into a full robotic manipulator that receives 3D coordinates from the phone's augmented reality spatial mapping. Once the simulation ran smoothly, we added features such as a slider for the gripper and built environments where the arm could interact with objects.

This is where I stepped into a lead role. Our team was spread across the country, and I was responsible for working with the on-site robots. Using the Pinocchio library for inverse kinematics, we converted the phone's movements into joint configurations for the robot. As the system matured, I demonstrated our work to investors visiting CMU and gave them the chance to try the application firsthand. I also presented the project to students in my research professor's class, which increased their interest in robotics research.
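To illustrate what "converting the phone's movements into joint configurations" involves, here is a minimal inverse kinematics sketch. It is not the project's Pinocchio code: it assumes a hypothetical planar two-link arm (link lengths `LINK1`, `LINK2` are made up) and uses damped least squares, a common iterative IK method, to find joint angles that place the end effector at a target point.

```python
import math

# Hypothetical link lengths (meters) for a toy planar 2-link arm.
# The real system used Pinocchio on a full manipulator model.
LINK1, LINK2 = 0.30, 0.25


def forward_kinematics(q):
    """End-effector (x, y) for joint angles q = (q1, q2)."""
    q1, q2 = q
    x = LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2)
    y = LINK1 * math.sin(q1) + LINK2 * math.sin(q1 + q2)
    return x, y


def jacobian(q):
    """2x2 Jacobian of (x, y) with respect to (q1, q2)."""
    q1, q2 = q
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-LINK1 * s1 - LINK2 * s12, -LINK2 * s12],
            [LINK1 * c1 + LINK2 * c12, LINK2 * c12]]


def solve_ik(target, q0, damping=1e-2, max_step=0.2, tol=1e-6, max_iters=500):
    """Damped least squares: iterate dq = J^T (J J^T + damping^2 I)^-1 e."""
    q = list(q0)
    for _ in range(max_iters):
        x, y = forward_kinematics(q)
        ex, ey = target[0] - x, target[1] - y  # task-space error
        if math.hypot(ex, ey) < tol:
            break
        J = jacobian(q)
        # A = J J^T + damping^2 I, a symmetric 2x2 matrix [[a, b], [b, d]].
        a = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
        b = J[0][0] * J[1][0] + J[0][1] * J[1][1]
        d = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
        det = a * d - b * b
        # w = A^-1 e, then dq = J^T w.
        wx = (d * ex - b * ey) / det
        wy = (-b * ex + a * ey) / det
        dq0 = J[0][0] * wx + J[1][0] * wy
        dq1 = J[0][1] * wx + J[1][1] * wy
        # Cap the joint update so a single step cannot jump too far.
        norm = math.hypot(dq0, dq1)
        if norm > max_step:
            dq0, dq1 = dq0 * max_step / norm, dq1 * max_step / norm
        q[0] += dq0
        q[1] += dq1
    return q
```

The damping term keeps the update well defined near singular poses (for example, a fully extended arm). In a teleoperation loop, passing the previous solution as `q0` (warm-starting) keeps each solve short, since consecutive phone poses tend to be close together.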

Project Leader: Karissa Barbu

Project Professor: Jeffrey Ichnowski, Ph.D.

iOS Developer: Rishan H.

Developers: Adam Hung, Dr. Christopher Timperley, Uksang Yoo

NeRFs

Neural radiance fields used to reconstruct and visualize 3D scenes with high-fidelity geometry.

Neural Radiance Fields

Visualizing 3D Scenes

Robots generally struggle to perceive and understand transparent or deformable objects such as glass, plastic, and cloth, because traditional depth cameras often fail when light is refracted, reflected, or absorbed. This makes manipulation tasks such as grasping these objects difficult.

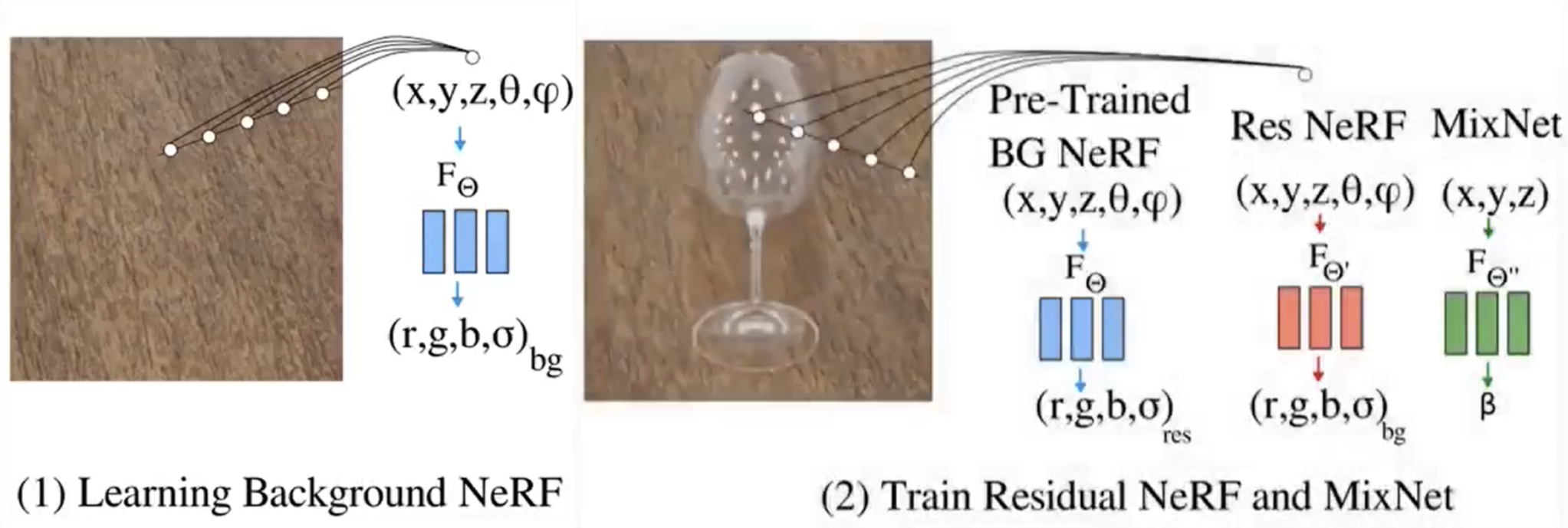

Our goal was to use Neural Radiance Fields (NeRFs) to improve robotic perception in these scenarios. NeRFs reconstruct detailed 3D scenes from multi-view images, capturing geometry, lighting, texture, and even transparency. Using these reconstructions, we aimed to let robots perceive objects that were previously invisible to their sensors or misinterpreted.
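A NeRF produces each pixel by compositing density and color samples along the camera ray. The sketch below implements the standard volume-rendering quadrature from the original NeRF formulation, C = Σ T_i (1 − exp(−σ_i δ_i)) c_i with T_i the accumulated transmittance, in plain Python. It is illustrative only and is not the project's training code; the function name `render_ray` is hypothetical.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite samples along one ray with the NeRF quadrature rule.

    sigmas: density sigma_i at each sample
    colors: (r, g, b) color c_i at each sample
    deltas: distance delta_i between adjacent samples
    Returns the pixel color and the per-sample weights.
    """
    transmittance = 1.0  # T_i: fraction of light that reaches sample i
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        w = transmittance * alpha
        weights.append(w)
        for k in range(3):
            pixel[k] += w * color[k]
        transmittance *= 1.0 - alpha  # equals exp(-sigma * delta)
    return tuple(pixel), weights
```

A sample with near-zero density receives almost no weight, so whatever lies behind it dominates the pixel. This is one way to see why thin transparent surfaces are hard to recover from a reconstruction.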

My contributions included setting up and calibrating a panoptic camera studio, collecting multi-view datasets, and supporting the design of manipulation policies for soft materials. I also configured and deployed a Yaskawa GP12 robot using ROS at the National Robotics Engineering Center (NREC) in Pittsburgh.

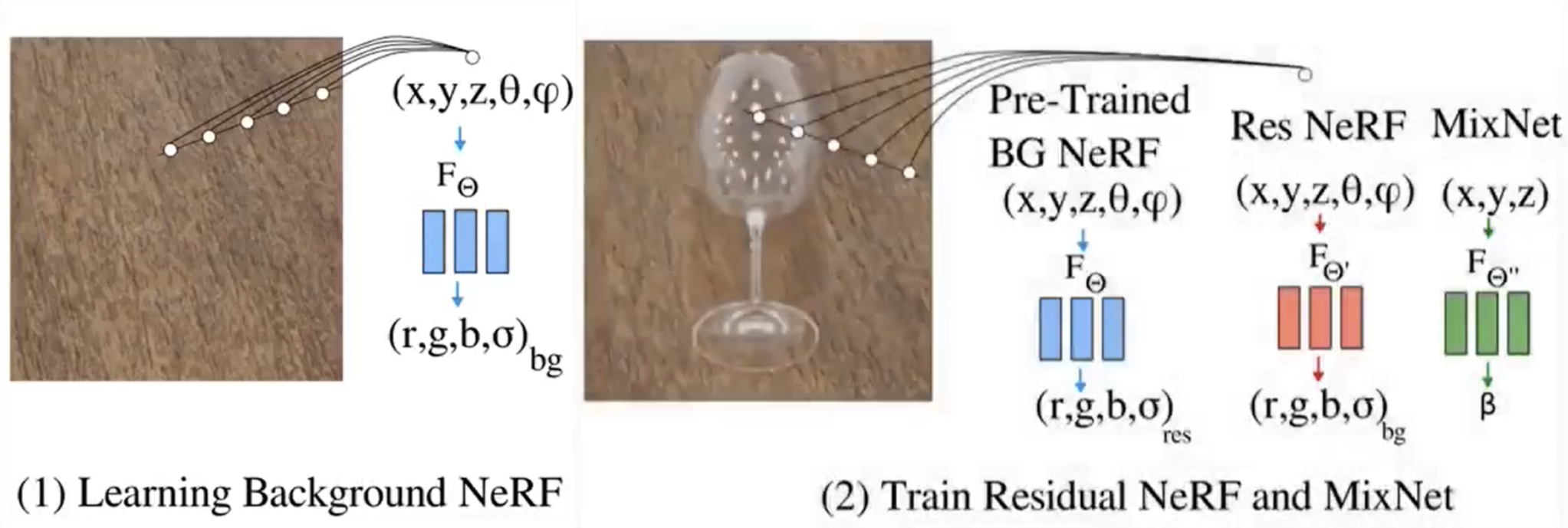

To train our models, we first built our scenes in Blender so that we could pretrain a NeRF on the background alone and then train on the synthetic scene containing the objects. I worked mainly on Res-NeRF, which builds on its precursor, Dex-NeRF. To handle interactions between the background and transparent objects, we used MixNet, a small neural network that adaptively blends a background NeRF with a residual NeRF at each 3D point. This improved rendering and reconstruction in regions where transparent objects distort or overlap the background.
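The per-point blending idea can be sketched as follows. This is a hedged illustration, not Res-NeRF's actual formulation: the blending rule (a convex mix of density and color controlled by a weight in (0, 1)), the tiny random-weight network in `make_mixnet`, and the helper `blend_fields` are all assumptions made for the example.

```python
import math
import random


def make_mixnet(hidden=8, seed=0):
    """Hypothetical tiny MLP mapping a 3D point to a mixing weight in (0, 1).

    Weights are random here; in practice they would be trained.
    """
    rng = random.Random(seed)
    w1 = [[rng.gauss(0.0, 0.5) for _ in range(3)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.gauss(0.0, 0.5) for _ in range(hidden)]
    b2 = 0.0

    def mixnet(point):
        # One ReLU hidden layer, then a sigmoid output.
        h = [max(0.0, sum(wij * xj for wij, xj in zip(row, point)) + bi)
             for row, bi in zip(w1, b1)]
        logit = sum(wi * hi for wi, hi in zip(w2, h)) + b2
        return 1.0 / (1.0 + math.exp(-logit))

    return mixnet


def blend_fields(point, background, residual, mixnet):
    """Blend background and residual field outputs at one 3D point.

    background, residual: callables returning (sigma, (r, g, b)) at a point.
    Assumed rule: convex combination weighted by w = mixnet(point).
    """
    w = mixnet(point)
    sigma_b, color_b = background(point)
    sigma_r, color_r = residual(point)
    sigma = (1.0 - w) * sigma_b + w * sigma_r
    color = tuple((1.0 - w) * cb + w * cr for cb, cr in zip(color_b, color_r))
    return sigma, color, w
```

Because the weight is predicted per point, the model can lean on the pretrained background field where the scene is unchanged and on the residual field near the transparent objects.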

These reconstructions made it possible for robots to manipulate objects that they could not perceive through standard methods. In the current iteration of this project, the lab is focusing on Gaussian Splatting, including Clear Splatting and Cloth Splatting, for faster rendering and better models of translucent and deformable objects.

Project Leader: Bardienus Duisterhof

Project Professor: Jeffrey Ichnowski, Ph.D.

Research Assistant: Karissa Barbu

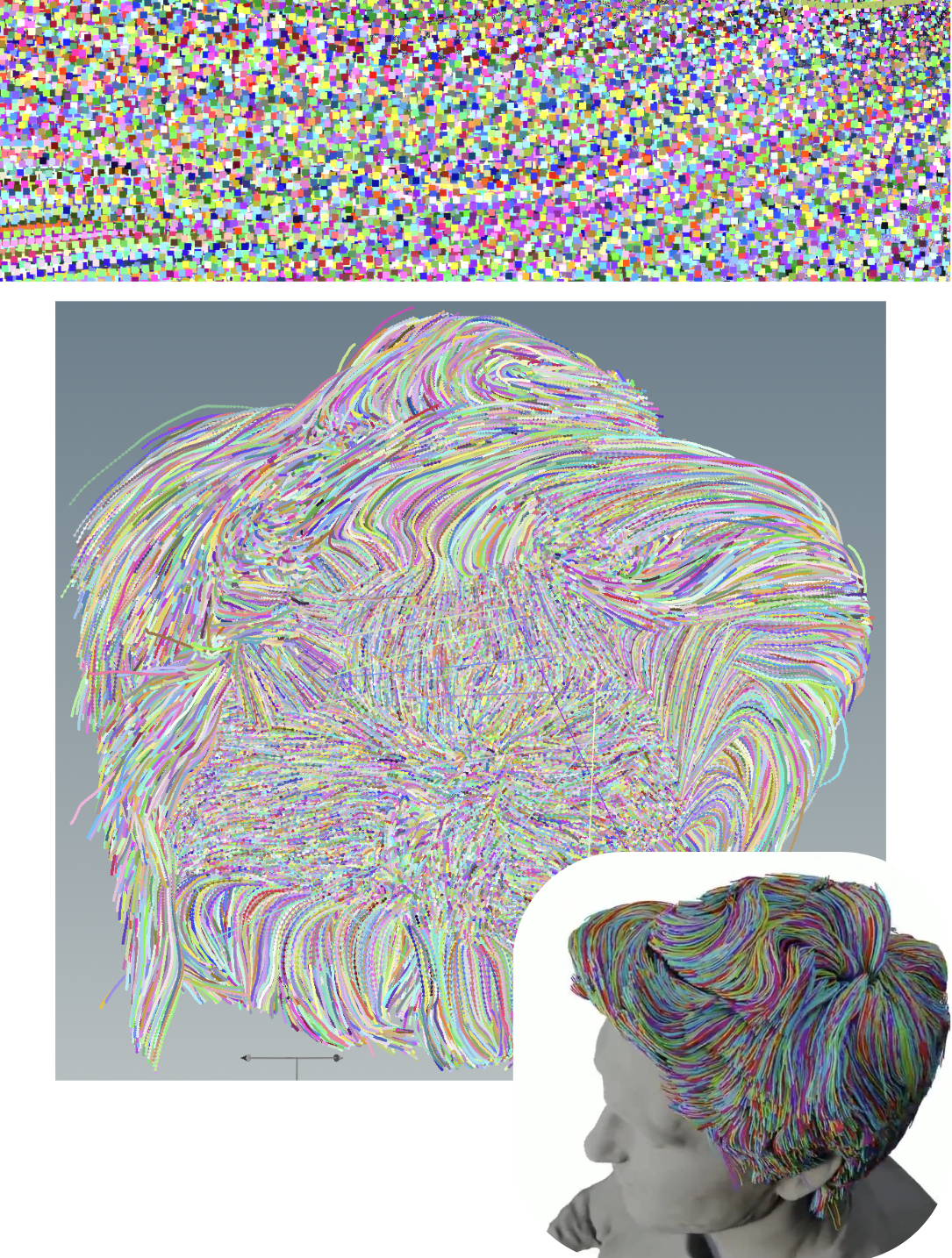

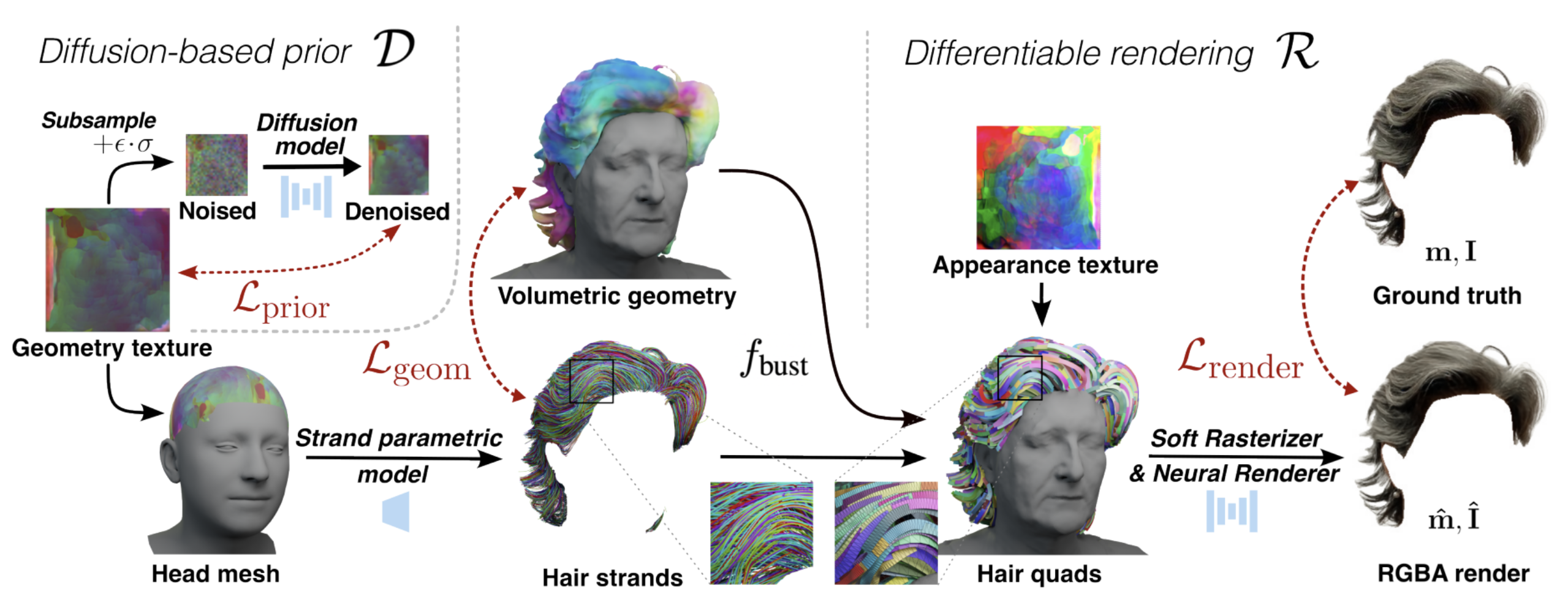

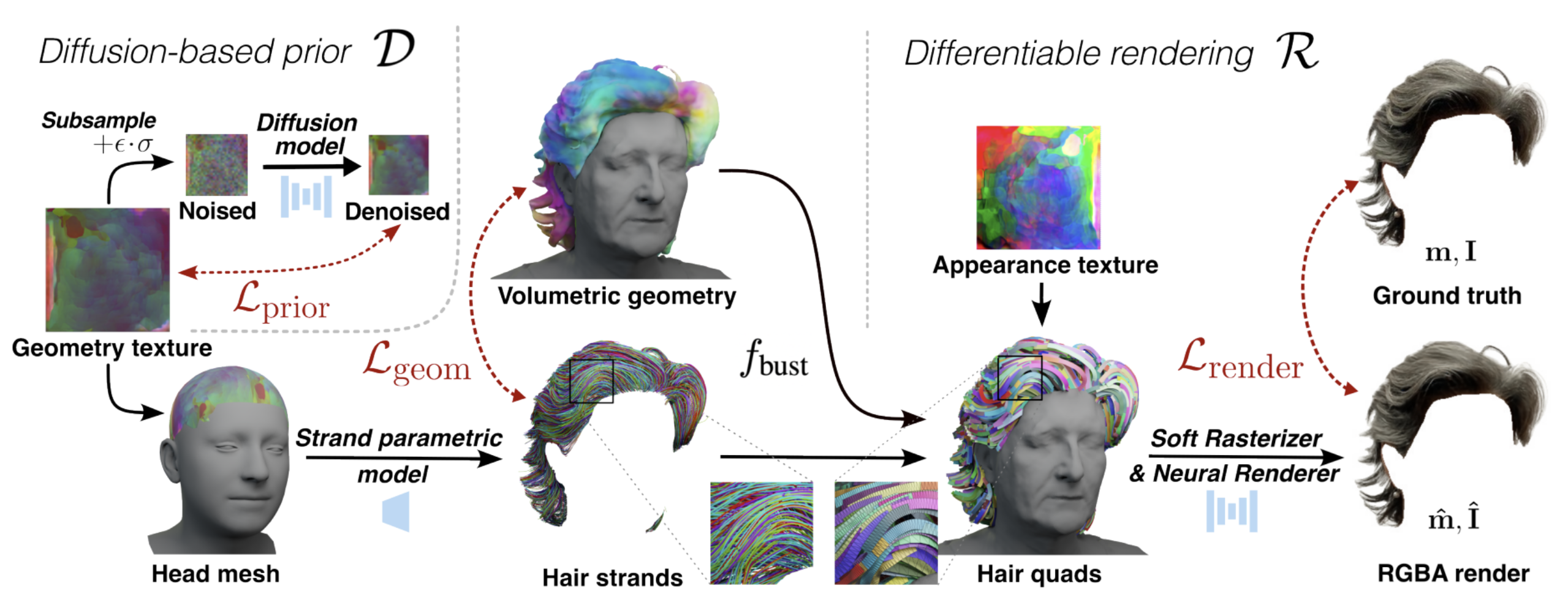

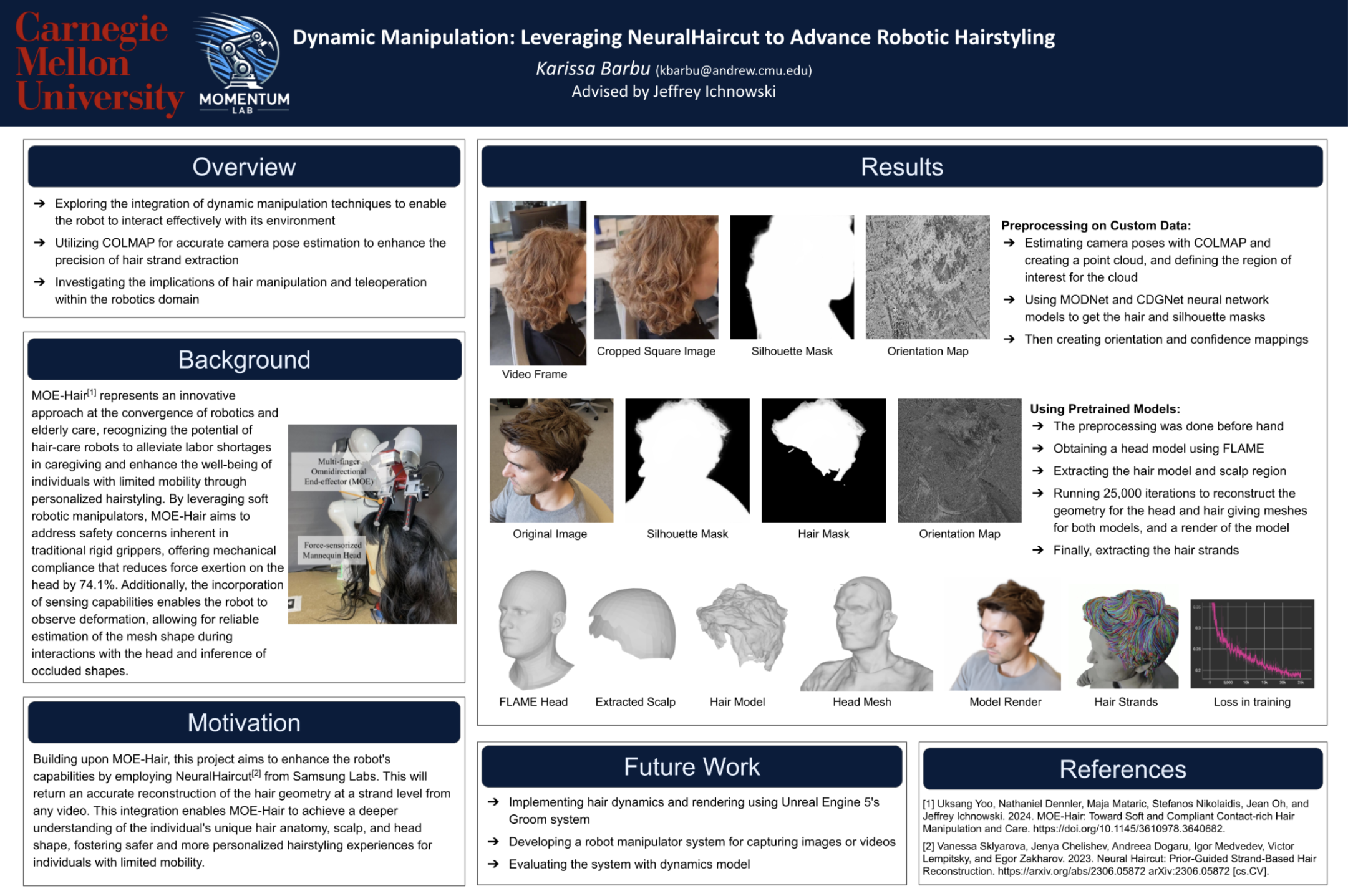

Neural Haircut

Deep model that extracts 3D hair structure from images to enable realistic, editable hair reconstructions.


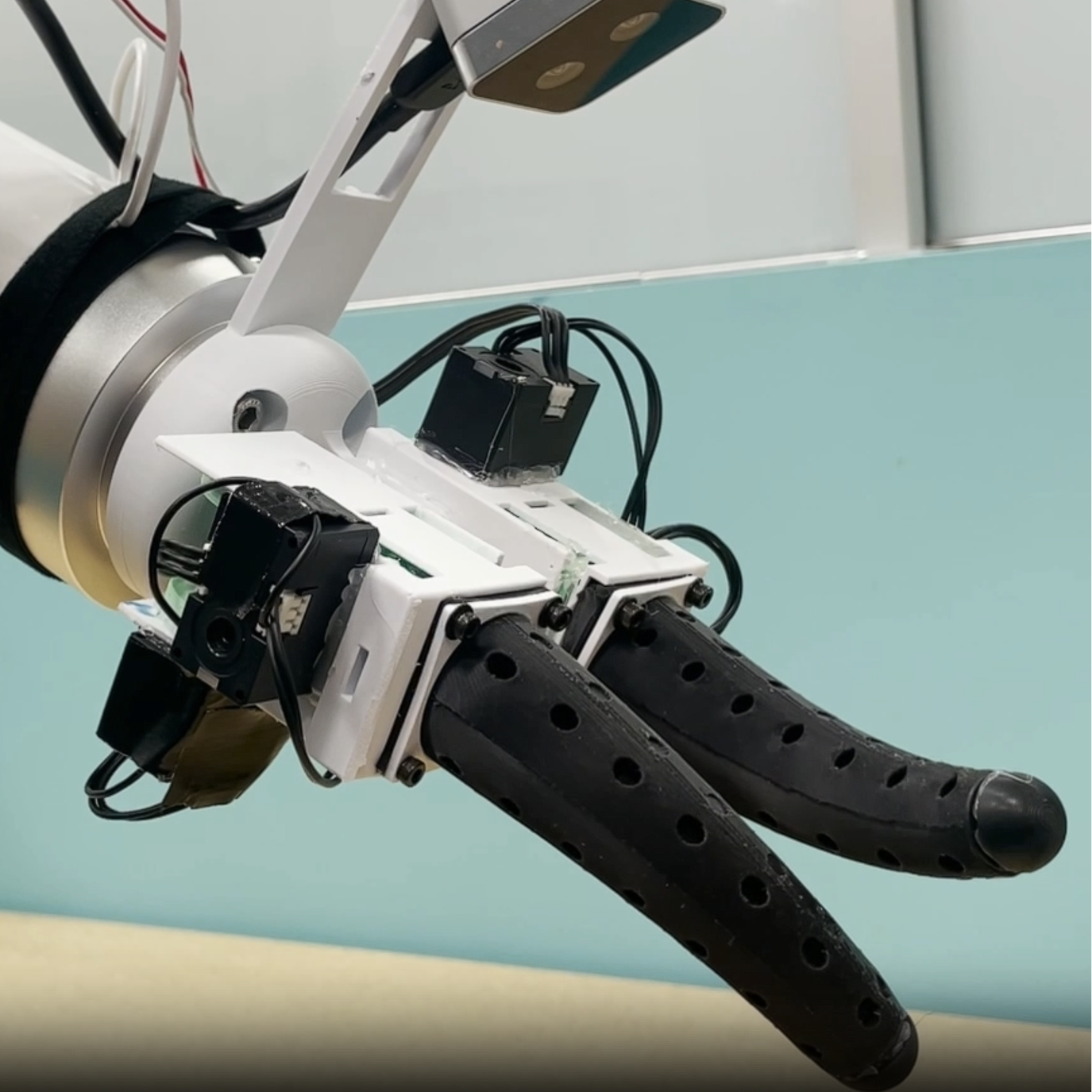

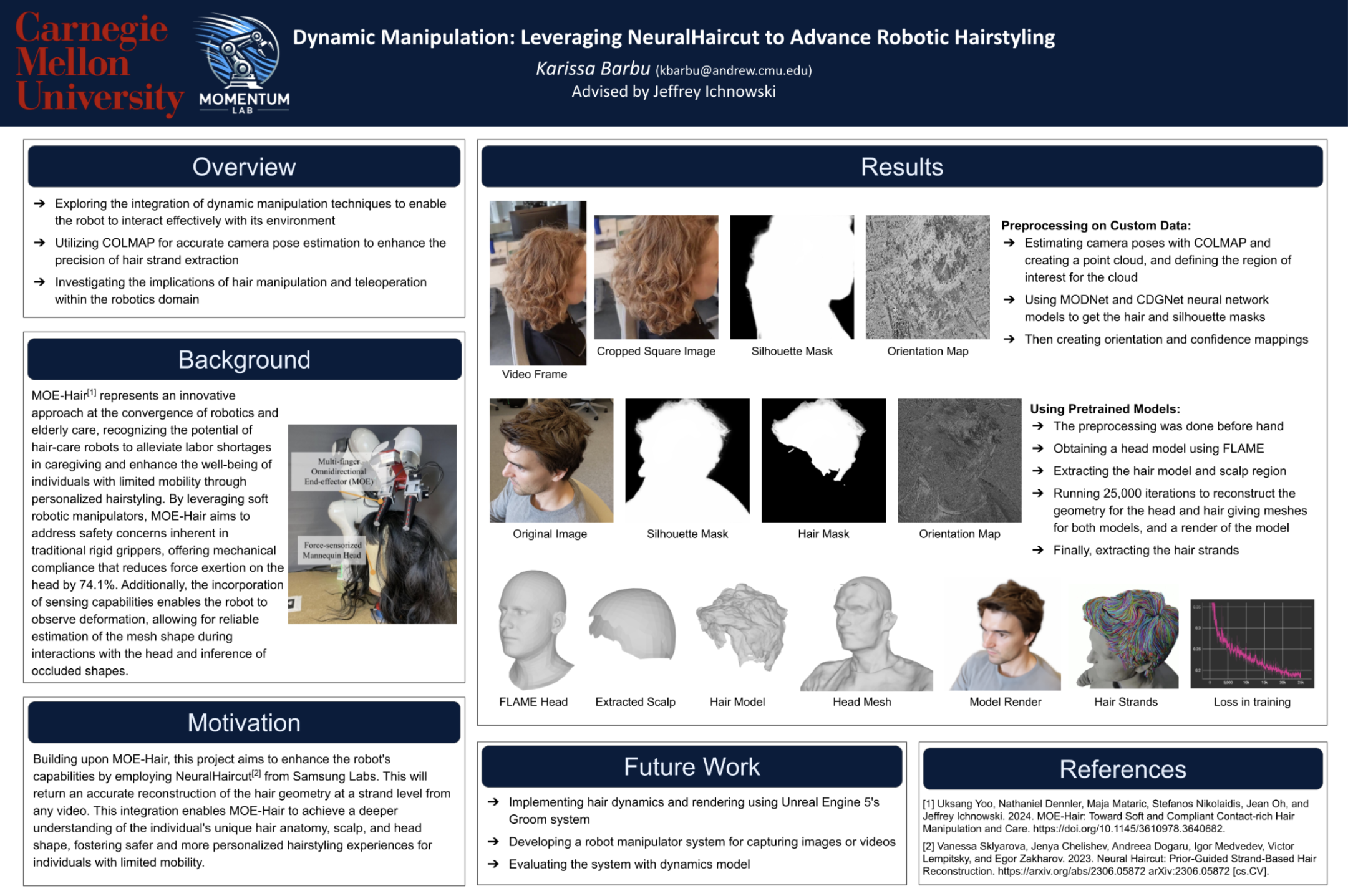

3D Hair Reconstruction

Assistive robotic hairstyling requires a robot to understand human hair anatomy with high precision while remaining safe and gentle during interaction. Hair is especially challenging to model because of its fine structure, high variability, and the complex physical interactions between strands.

To address this, we used NeuralHaircut, a deep learning model from Samsung Labs, to reconstruct personalized 3D hair geometry from multi-view images. Our goal was to build a perception pipeline around this model that suits robot-assisted hairstyling, one that lets a robot reason about a person's hair structure safely and precisely.

My contribution focused on building and extending the perception pipeline. I used COLMAP for camera calibration and pose estimation across video frames, which the reconstruction needs in order to recover strand-level detail. I also extended NeuralHaircut with ideas from MOE-Hair, improving styling realism and adapting the model for robotic manipulation.

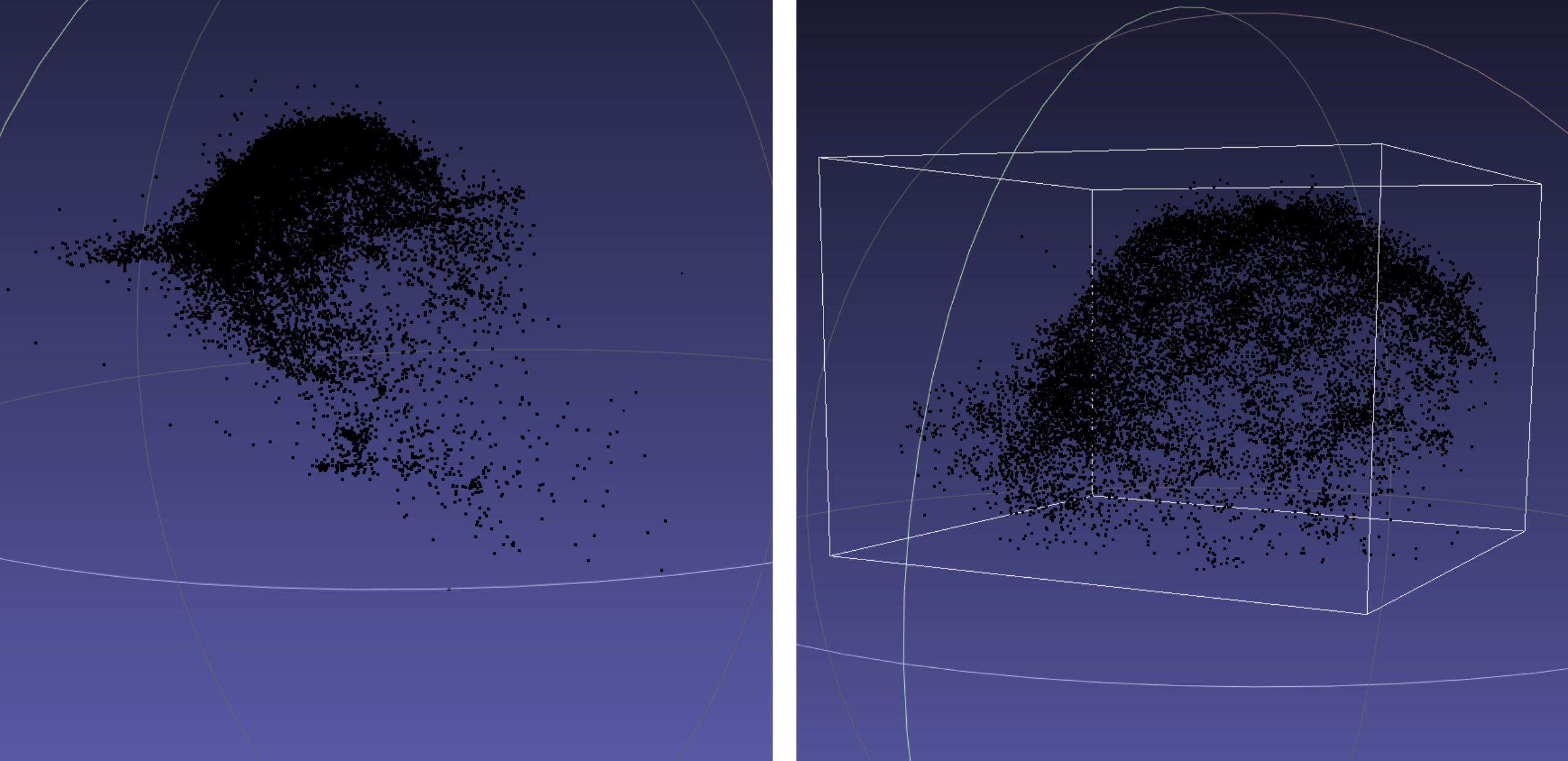

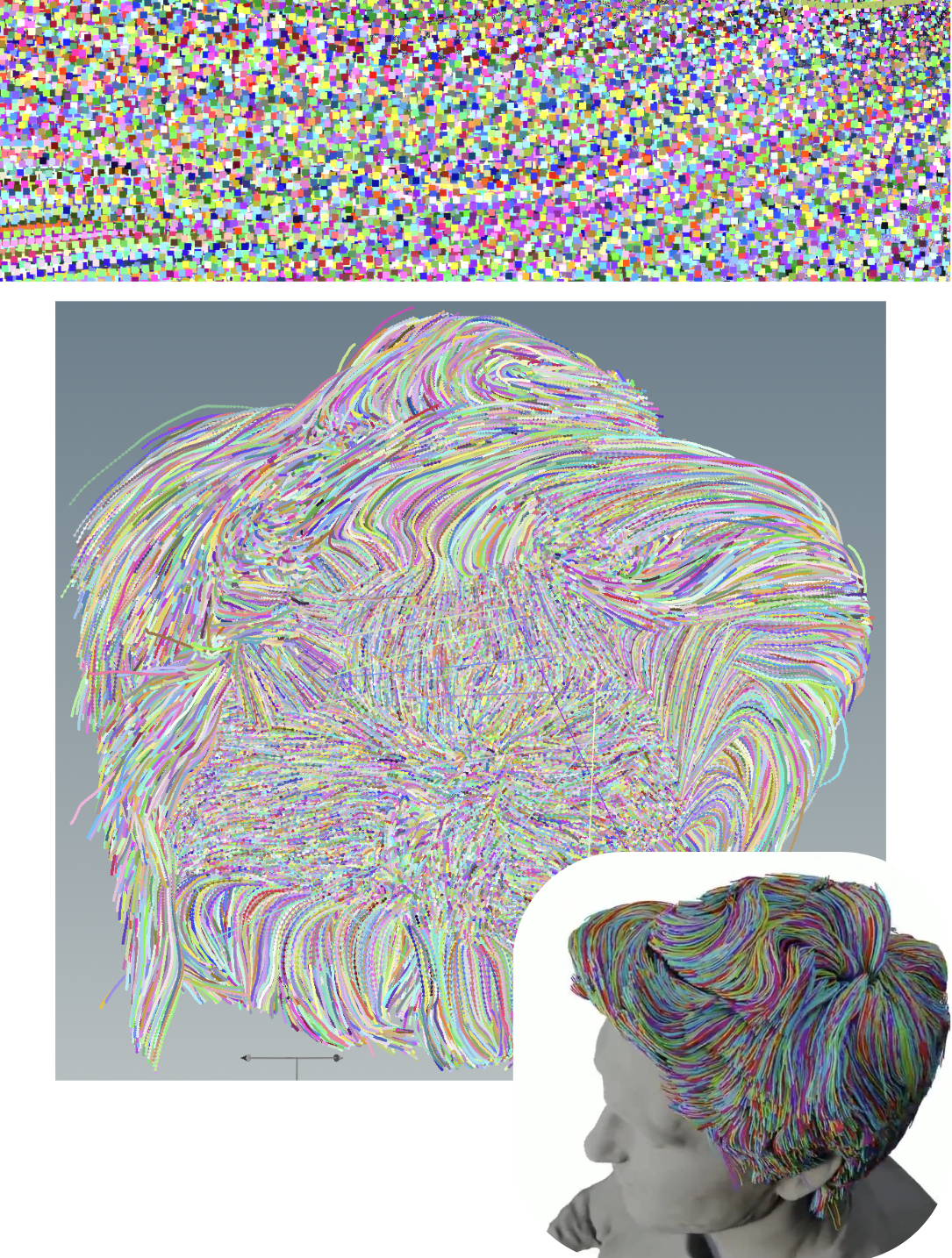

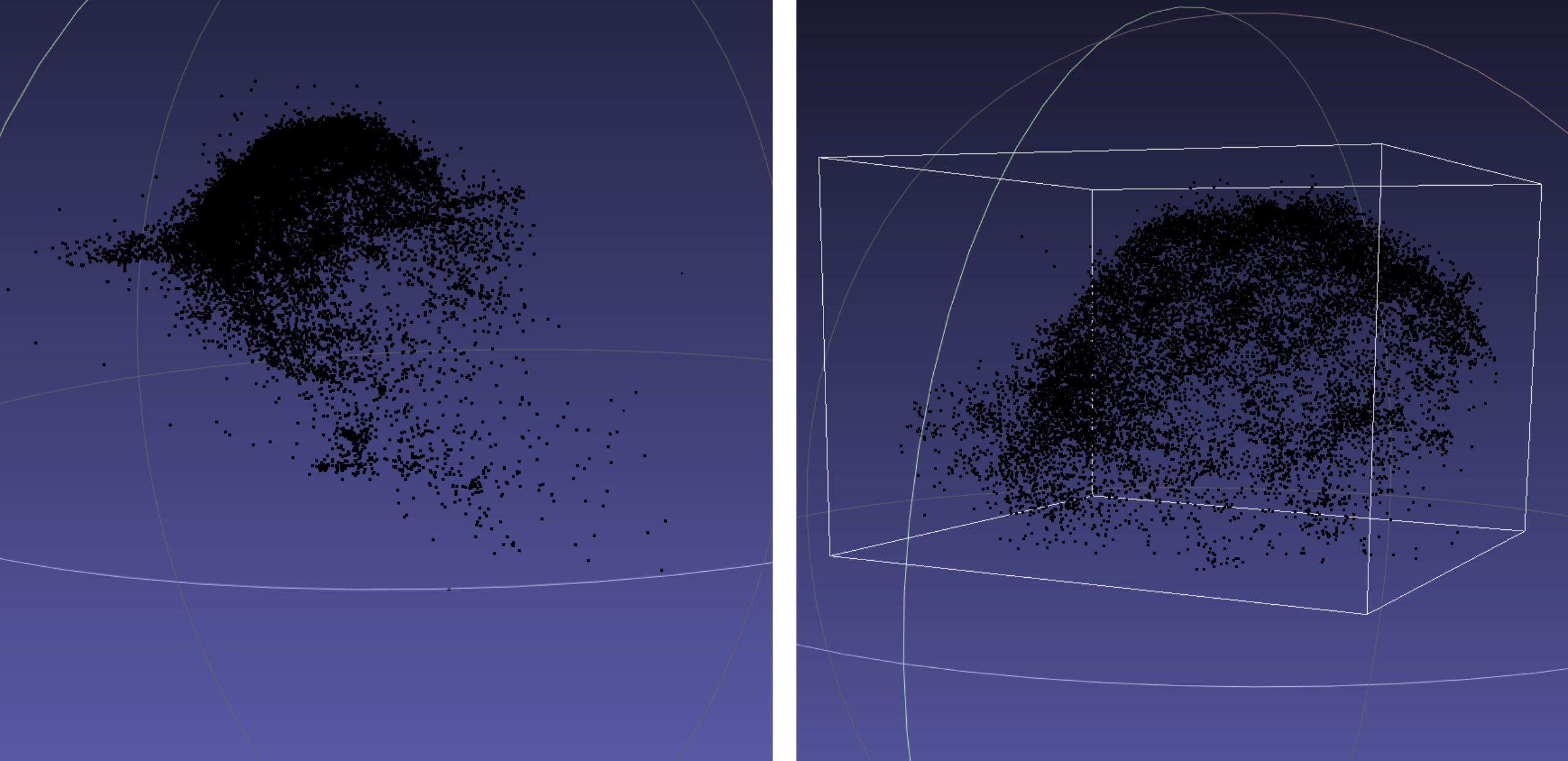

First, we recorded the subject's head with a phone camera. We then extracted frames from the video and processed them with COLMAP to generate a point cloud of the subject's head and hair. For each frame, we extracted hair and silhouette masks, which were used to build separate head and hair models. Individual hair strands were then reconstructed; because the strands initially formed dense point clusters, I used Houdini to convert these points into coherent strand geometries suitable for downstream manipulation.
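The project did this clustering-to-strand conversion in Houdini. As a rough stand-in for that step, the sketch below chains an unordered point cluster into a polyline by greedy nearest-neighbor search from a root point, then resamples it to evenly spaced points. The functions `order_strand` and `resample` and the choice of root (for example, a point on the scalp) are hypothetical, and greedy chaining can zigzag on noisy, dense clusters.

```python
import math


def order_strand(points, root):
    """Greedily chain an unordered cluster of 3D points into a polyline.

    Starts at the point nearest `root` and repeatedly steps to the
    closest point that has not been visited yet.
    """
    remaining = list(points)
    current = min(remaining, key=lambda p: math.dist(p, root))
    remaining.remove(current)
    strand = [current]
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(p, current))
        remaining.remove(nxt)
        strand.append(nxt)
        current = nxt
    return strand


def resample(strand, n):
    """Resample a polyline (at least 2 points) to n >= 2 points evenly spaced by arc length."""
    seg = [math.dist(a, b) for a, b in zip(strand, strand[1:])]
    total = sum(seg)
    out = []
    i, walked = 0, 0.0  # current segment index and arc length before it
    for k in range(n):
        s = total * k / (n - 1)  # target arc length for output point k
        while i < len(seg) - 1 and walked + seg[i] < s:
            walked += seg[i]
            i += 1
        t = 0.0 if seg[i] == 0 else (s - walked) / seg[i]
        t = min(max(t, 0.0), 1.0)
        a, b = strand[i], strand[i + 1]
        out.append(tuple(ai + t * (bi - ai) for ai, bi in zip(a, b)))
    return out
```

Resampling every strand to the same point count gives downstream code a uniform representation, which is convenient when strands are fed to a model or a manipulation planner.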

On the manipulation side, we used MOE, a custom soft and flexible end-effector designed to safely grasp and manipulate hair without exerting excessive force on the user's head. This made the system well suited for assistive, human-facing tasks.

I presented this project at Carnegie Mellon University’s 2024 Meeting of the Minds, highlighting its potential for real-world assistive robotics applications.

In the current iteration of this project, the team has developed DYMO-Hair, a model-based robotic system for visual goal-conditioned hairstyling. DYMO-Hair can model how moving a single hair strand affects the surrounding hair, enabling more realistic, coordinated styling behaviors.

Project Leader: Uksang Yoo

Project Professor: Jeffrey Ichnowski, Ph.D.

Research Assistant: Karissa Barbu

Modified Point Cloud (Right)

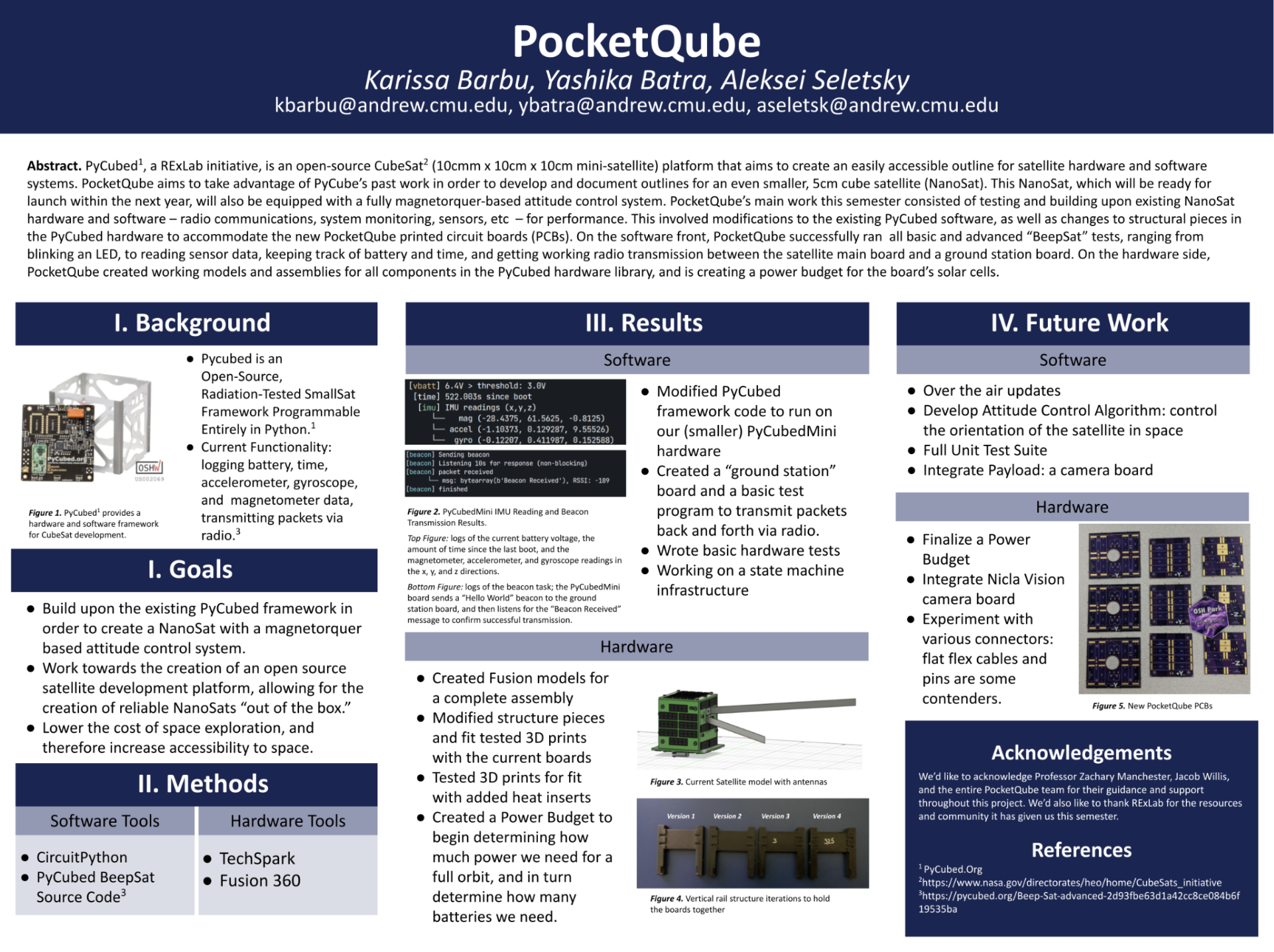

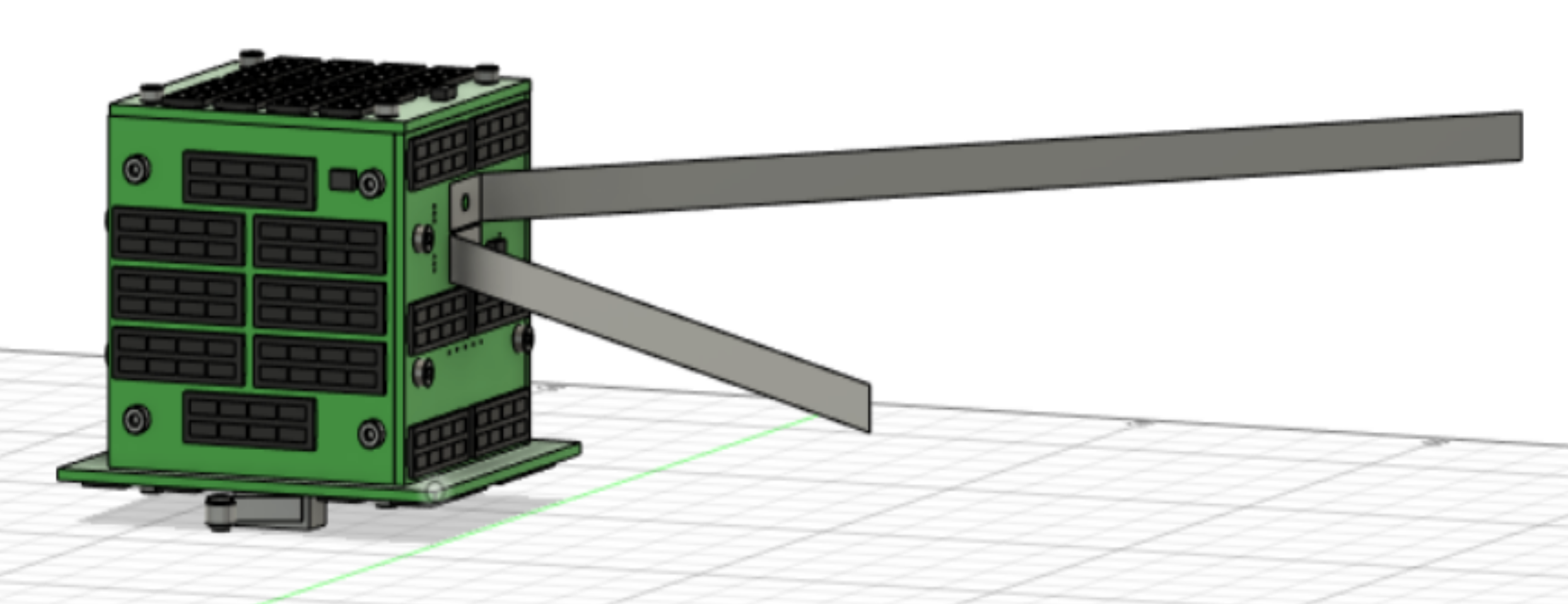

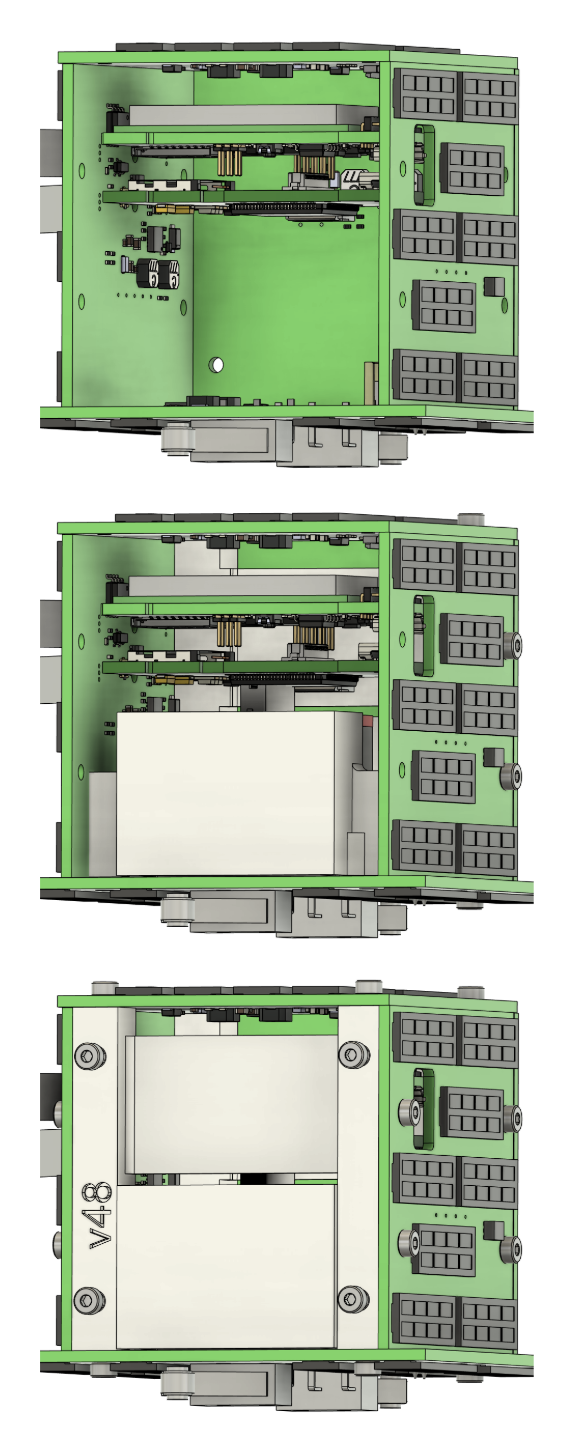

PocketQube


Project Leader: Jacob Willis, Ph.D.

Project Professor: Zachary Manchester, Ph.D.

Developers: Karissa Barbu, Yashika Batra, Aleksei Seletskiy
